This started as a comment on Kevin Dorst's interesting post on how differential perception can lead to extremely biased beliefs. But it got more than a bit long, so here it is. The big theme is going to be that this is yet another case where thinking about analogies between minds and organisations can be helpful for understanding each of them.

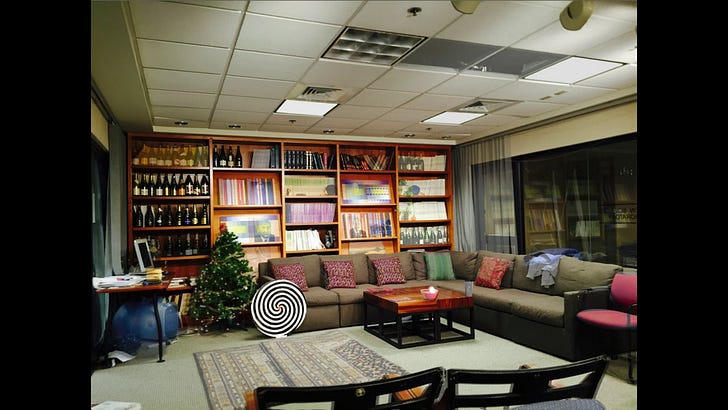

Dorst's model starts with the idea that people are more disposed to notice some kinds of evidence than other kinds. That's surely true. But I'm not sure the example he uses really shows it. Watch this video, and focus on what happens to the exercise ball:

OK, so you saw the exercise ball change, and probably didn't see a lot of other things change too. But note how carefully the video had to be staged to do that. What people tend to miss are small, gradual changes. The big picture is not that people only ever see what they are focussing on. It is that they are, as Dan Sperber and Hugo Mercier have been pointing out over a number of works, vigilant.

Here's a little thought experiment to see what vigilance is like from the inside. Imagine you're walking through a very crowded area: maybe Times Square, or the corridors of a teaching building at a big university between classes. From the inside it doesn't feel like you are having singular thoughts about each of the individuals in the crowd. But if one of them were a large clown in a two-foot-high wig, holding a raised sword, you'd latch onto them straight away. At some level you must be scanning each individual in the crowd so that you can, if needed, pay attention to the person who most needs it. In short, you don't think about each individual, but you are vigilant towards everyone.

A good model of how vigilance works would be more than a bit useful. The rough picture is surely something like this. We are constantly scanning a large field around us. In physical environments, this is often done with hearing: vision is directional, but audition is good at taking in the whole space. (This is why it's easier to trick people with videos like the one above than in real life.) But what information do we take in from this scan? There's too much to process it all in real time. In the first instance, the scan delivers something like a 1-bit signal from each of a handful of different directions: either everything is fine, or look over here. If there's a sword-wielding clown, the first-pass scan doesn't say what the problem is; it just says that there is a problem, and that you need to attend to it now. This is, I think, a pretty good division of cognitive labour, even if it breaks down in cases where there is a bit of change, but not enough to warrant the "look over here" signal.
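A minimal Python sketch of this two-stage picture, under loudly simplified assumptions: each region of the scene is reduced to a single hypothetical `salience` number, the cheap first pass turns that into one bit per region by comparing it with a threshold, and only flagged regions get the expensive second look. The `change_alerts` function shows the failure case, assuming the first pass registers only change between successive moments: a large change spread over many small steps never trips the alarm. All names and numbers are illustrative and are not taken from any published model of vigilance.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    salience: float  # hypothetical: how out-of-the-ordinary this region looks right now
    details: str     # the rich information we only read if we attend to the region


def first_pass_scan(regions, threshold=0.8):
    """Cheap pass: reduce each region to one bit, True meaning 'look over here'."""
    return {r.name: r.salience >= threshold for r in regions}


def attend(regions, flags):
    """Expensive pass: read the details only for regions the scan flagged."""
    return [r.details for r in regions if flags[r.name]]


def change_alerts(values, threshold=0.5):
    """Flag a moment only when it differs sharply from the moment before."""
    return [abs(b - a) >= threshold for a, b in zip(values, values[1:])]


crowd = [
    Region("left", 0.1, "commuters"),
    Region("ahead", 0.95, "large clown, two-foot wig, raised sword"),
    Region("right", 0.2, "students heading to class"),
]
print(attend(crowd, first_pass_scan(crowd)))  # ['large clown, two-foot wig, raised sword']

abrupt = [0.0] * 10 + [1.0] * 10       # the whole change happens at once
gradual = [i / 19 for i in range(20)]  # from 0.0 to 1.0 in 19 small steps
print(any(change_alerts(abrupt)))   # True
print(any(change_alerts(gradual)))  # False
```

The threshold does all the work here: raise it and fewer things ever reach attention; lower it and the expensive second pass gets swamped.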

This way of thinking about the problem makes it sound like the kind of information management problem that has been a thread running through Daniel Davies's recent Substack posts, and his forthcoming book. A central question organisations have to face is how to get the needed information to decision-makers in a form they can use.

Historically, the limits here were technological: literally, how to move information around. But now they are psychological. General Motors could drop a 200GB file with second-by-second details of what is happening in every part of the operation on the CEO's desk every morning. But that wouldn't be much use to her. She needs the information in a form she can use.

There are two obvious ways to solve this kind of problem. First, move decisions down to a point where the person responsible only has to take in a smaller amount of information. Second, find the best ways to summarise that 200GB into a form that is useful for human decision making. One of the (many) interesting lines in Davies's posts (and, I imagine, book) is how much of the history and practice of accounting can be seen as trying to solve the second problem.

One note here for philosophers. I don't think there's very much work on the philosophy of accounting. Indeed, I suspect a lot of philosophers would guffaw at the very idea of philosophy of accounting. But the question of how to extract usable information from a buzzing, blooming confusion of organisational activity seems like a very interesting philosophical question. Indeed, it's one that philosophers of science are actively working on. (A point I'll come back to below.)

Back to the human-organisation analogy. Davies notes that one big role budgets play is to constrain when a sub-unit can send out the 1-bit signal: everything is OK here. Keep to the budget, and you just send a simple message up the chain. Sperber-Mercier vigilance has, I think, the same kind of role. It lets the central manager, roughly the conscious self, know the most important thing there is to know about a part of the nearby environment: nothing to worry about here. And even the most resource-constrained information processor can do something useful with that information, e.g., not worry about that region.

So now back to Dorst. How should we think about models for attentional bias? I have two big picture thoughts, without much idea of how to fill in the details on either.

The first is that attentional bias seems like a result of the vigilance system misfiring. One thing we seem to have some control over is raising or lowering the threshold at which we’ll be vigilant to a thing. One way to be racist is to have a vigilance system that says “Danger alert - look over here” any time it spots a Black man. I suspect what’s going on in the real-life cases that Dorst is modeling is not simply that one attends to X and ignores Y; it’s that the threshold the vigilance system sets for X-effects and Y-effects is different.
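To make the threshold point concrete, here is a toy Python sketch. It is not Dorst's model, and the numbers are made up: X-evidence and Y-evidence arrive in equal amounts with identical strengths, and the only difference is the vigilance threshold each kind has to clear before it gets noticed. The noticed sample ends up skewed even though nothing noticed was false and nothing was deliberately ignored.

```python
def noticed_counts(evidence, thresholds):
    """Count the items of each kind whose strength clears that kind's vigilance threshold."""
    counts = {kind: 0 for kind in thresholds}
    for kind, strength in evidence:
        if strength >= thresholds[kind]:
            counts[kind] += 1
    return counts


# Hypothetical evidence stream: ten X-items and ten Y-items with identical strengths.
strengths = [i / 10 for i in range(10)]  # 0.0, 0.1, ..., 0.9
evidence = [("X", s) for s in strengths] + [("Y", s) for s in strengths]

even = noticed_counts(evidence, {"X": 0.45, "Y": 0.45})
skewed = noticed_counts(evidence, {"X": 0.25, "Y": 0.65})  # X-effects trip the alarm more easily

print(even)    # {'X': 5, 'Y': 5}
print(skewed)  # {'X': 7, 'Y': 3}
print(skewed["X"] / sum(skewed.values()))  # 0.7, the share of noticed evidence that is X-evidence
```

Since every noticed item is genuine, an observer who updates only on what they notice will drift toward whatever X-evidence supports. On this toy picture the bias lives in the thresholds, not in any single perception.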

The other is that to understand information flows in the mind, it’s worth looking at how organisations manage the flow of information, and how sometimes that can make a huge difference to what those organisations do. And then we can see how much each person acts like a particular kind of organisation.

In philosophy, the best work I know of on this question comes from philosophers of science looking at the effects of information flows within scientific communities. A lot of this work is downstream of Kevin Zollman's work on Communication Structures. For instance, in chapter 3 of The Misinformation Age, Cailin O'Connor and James Weatherall have a model of how a 'propagandist' can influence social beliefs without spreading any falsehoods, but just by selecting which truths to advertise. That looks fairly similar to Dorst's model.
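The core mechanism of selecting which truths to advertise can be shown with a much simpler toy than O'Connor and Weatherall's network model. This Python sketch assumes (hypothetically) ten trials of some intervention, each with ten attempts, whose pooled success rate is 0.6. A "propagandist" forwards only the trials that came out at or below 50%. Every forwarded result is real, yet a listener who pools only what they are shown gets a much lower estimate. The data are invented for illustration and are not from the book.

```python
def pooled_rate(trials, n_per_trial):
    """Pooled success rate across the trials a listener actually sees."""
    return sum(trials) / (n_per_trial * len(trials))


N = 10  # hypothetical number of attempts per trial
all_trials = [8, 5, 7, 4, 6, 6, 3, 7, 5, 9]  # hypothetical successes out of N in each trial

# The propagandist invents nothing: it only filters which real trials get forwarded.
shared = [k for k in all_trials if k <= N // 2]

print(pooled_rate(all_trials, N))  # 0.6
print(pooled_rate(shared, N))      # 0.425
```

The structural parallel with Dorst's model is that in both cases the distortion comes from which true items get through, not from any false item.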

What would be really useful, and what I don't know of much work on, is an account of the optimal information distribution system under bandwidth constraints. The only work I know of is also by Cailin O'Connor: this paper of hers on how vagueness might be a natural side-effect of optimal communication given a limit on how many distinct signals can be sent. But it would be nice to know more. Vigilance models and budgetary systems both seem like attempts to optimise under bandwidth constraints. It would be good to have formal, or experimental, work on which approaches are in fact optimal, and on the circumstances in which generally optimal systems might nonetheless fail.